Kubernetes, often abbreviated K8s, is a portable, open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. It emerged from the need to coordinate the complexity that comes with running containers at scale, and it has become a popular tool for container orchestration in modern cloud environments.

Origins and Evolution

Birth of Kubernetes

Kubernetes was originally designed by Google, drawing on its experience with an internal cluster manager called Borg. Google released Kubernetes as an open-source project in 2014 so that outside developers could use and build on it.

Evolution of Container Orchestration

Before Kubernetes, containers could not be managed at large scale as efficiently as they are now. Tools such as Docker Swarm and Apache Mesos addressed the same problem, but neither matched the breadth and versatility of Kubernetes.

Key Concepts of Kubernetes

Kubernetes operates based on several key concepts:

| Concept | Description |
| --- | --- |
| Containers | Containers are lightweight, self-contained units that package an application together with everything it needs to run. Kubernetes relies on containers to bundle an application and its dependencies. |
| Pods | A pod is the basic unit of deployment in Kubernetes. It groups one or more containers that share a lifecycle and always run together on the same node. |
| Nodes | Nodes are the physical or virtual machines on which pods run. Each node runs a container runtime and the services needed to run pods, and is managed by the Kubernetes control plane. |
| Clusters | A Kubernetes cluster is a pool of hosts, each with its own operating system, that run containerized applications under the control of the Kubernetes control plane. A cluster can span multiple nodes and is designed for high availability. |
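
The containment relationship between these concepts (a cluster holds nodes, a node holds pods, a pod holds containers) can be modeled roughly as follows. This is an illustrative sketch only: the class names, field names, and image names are hypothetical and do not mirror the Kubernetes API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    name: str
    containers: list[Container]


@dataclass
class Node:
    name: str
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    nodes: list[Node] = field(default_factory=list)

    def find_node_of(self, pod_name: str) -> Optional[str]:
        """Return the name of the node running the pod, or None if it is not found."""
        for node in self.nodes:
            if any(pod.name == pod_name for pod in node.pods):
                return node.name
        return None


# One pod with two containers; both containers live on the same node.
web = Pod("web", [Container("app", "example/app:1.0"), Container("log-shipper", "example/logs:1.0")])
cluster = Cluster([Node("node-a", [web]), Node("node-b")])
print(cluster.find_node_of("web"))  # node-a
```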

Benefits of Kubernetes

| Benefit | Description |
| --- | --- |
| Scalability | Kubernetes can spread applications across nodes and load-balance traffic between them. When activity surges, it can provide more resources automatically to handle the extra load. |
| Portability | Applications run the same way on physical servers or VMs in a data center and on cloud platforms, which gives teams flexibility in where they deploy. |
| Resource Efficiency | Kubernetes shares compute, storage, and network resources among workloads, which improves resource utilization. |

Kubernetes Architecture

Master Node

The master node, now usually called the control plane, is the central control point of a Kubernetes cluster. Its components include kube-apiserver, kube-controller-manager, kube-scheduler, and etcd, which together manage the state of the cluster and coordinate its operations.

Worker Nodes

Worker nodes, historically also called minions, are the nodes that run pods and carry the actual application workloads. Each worker node runs the kubelet, which reports to the master node, and kube-proxy, which handles network communication for the pods on that node.

kubelet, kube-proxy, and etcd

The kubelet is a node agent that starts and monitors the containers in each pod according to the pod's configuration. Kube-proxy runs on each worker node and handles network proxying, so that traffic addressed to a service reaches the pods behind it. Etcd is a distributed key-value store that holds the cluster's configuration and current state.
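
To make the division of labour more concrete: before a kubelet can start a pod, the scheduler has to choose a node for it. The sketch below shows a toy version of that placement decision, filtering out nodes that cannot fit the pod and then preferring the node with the most free CPU. The real kube-scheduler applies many more filtering and scoring criteria; the function name, dictionary keys, and node names here are assumptions made for illustration.

```python
from typing import Optional


def pick_node(nodes: dict[str, dict[str, int]], cpu_millis: int, memory_mib: int) -> Optional[str]:
    """Pick a node for a pod that requests the given CPU and memory.

    nodes maps a node name to its free resources, for example
    {"node-a": {"cpu_millis": 500, "memory_mib": 1024}}.
    """
    # Filter step: keep only nodes that can fit the request.
    feasible = {
        name: free
        for name, free in nodes.items()
        if free["cpu_millis"] >= cpu_millis and free["memory_mib"] >= memory_mib
    }
    if not feasible:
        return None  # no node fits, so the pod would stay unscheduled
    # Score step: prefer the node with the most free CPU; ties go to the first name alphabetically.
    return max(sorted(feasible), key=lambda name: feasible[name]["cpu_millis"])


free = {
    "node-a": {"cpu_millis": 500, "memory_mib": 2048},
    "node-b": {"cpu_millis": 1500, "memory_mib": 512},
    "node-c": {"cpu_millis": 1000, "memory_mib": 4096},
}
print(pick_node(free, cpu_millis=250, memory_mib=1024))  # node-c
```

Here node-b has the most free CPU but is filtered out because it lacks memory, so node-c is chosen.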

Getting Started with Kubernetes

Installation

To start using Kubernetes, you can create a cluster yourself with tools such as kubeadm or Minikube, or use a managed Kubernetes service from a major cloud platform, such as Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), or Microsoft Azure Kubernetes Service (AKS).

Basic Commands

Users manage clusters with kubectl, the command-line interface for Kubernetes. Common commands such as get, describe, apply, and delete are used to create, inspect, and control resources such as pods, deployments, and services.
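
As a rough illustration of how kubectl invocations are put together, the sketch below builds argument lists for two common commands and offers a thin wrapper for running them. The helper names, the file name deployment.yaml, and the namespace staging are hypothetical; actually running the commands assumes kubectl is installed and configured for a cluster.

```python
import subprocess


def kubectl_args(verb: str, *rest: str, namespace: str = "default") -> list[str]:
    """Build the argument list for a kubectl invocation."""
    return ["kubectl", verb, *rest, "--namespace", namespace]


def run_kubectl(verb: str, *rest: str, namespace: str = "default") -> str:
    """Run kubectl and return its standard output (needs kubectl and a reachable cluster)."""
    result = subprocess.run(
        kubectl_args(verb, *rest, namespace=namespace),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if kubectl exits with an error
    )
    return result.stdout


# Only the argument lists are printed here; nothing is executed.
print(kubectl_args("get", "pods"))
print(kubectl_args("apply", "-f", "deployment.yaml", namespace="staging"))
```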

Kubernetes in Practice

Deployments

A Deployment is a Kubernetes object that manages a set of pods and controls how many replicas of them should run. Users describe the desired state of an application declaratively, and Kubernetes continuously reconciles the actual state with that desired state.
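
The reconciliation idea can be sketched in a few lines: compare the desired replica count with the pods that are actually running and work out which actions close the gap. This is a simplified model, not the actual Deployment controller; the function name and the pod naming scheme are assumptions.

```python
def reconcile(desired_replicas: int, running_pods: list[str], name: str) -> list[str]:
    """Return the actions needed to move from the observed state to the desired state."""
    actions = []
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create the missing ones.
        for i in range(diff):
            actions.append(f"create {name}-{len(running_pods) + i}")
    elif diff < 0:
        # Too many pods: delete the surplus from the end of the list.
        for pod in running_pods[diff:]:
            actions.append(f"delete {pod}")
    return actions


print(reconcile(3, ["web-0"], "web"))                    # ['create web-1', 'create web-2']
print(reconcile(1, ["web-0", "web-1", "web-2"], "web"))  # ['delete web-1', 'delete web-2']
print(reconcile(2, ["web-0", "web-1"], "web"))           # []
```

When the observed state already matches the desired state, no actions are produced, which is what lets such a loop run repeatedly without side effects.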

Services

Services give pods a stable interface through which they can communicate with other pods inside the cluster and, depending on the type, with clients outside it. The three most commonly used Service types are ClusterIP, NodePort, and LoadBalancer.
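
A Service typically finds the pods it should route to by matching labels against a selector. The sketch below shows that matching logic in isolation; the function name, pod names, and labels are made up for illustration.

```python
def select_pods(selector: dict[str, str], pods: dict[str, dict[str, str]]) -> list[str]:
    """Return the names of pods whose labels contain every key/value pair in the selector."""
    return sorted(
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


pods = {
    "web-0": {"app": "web", "tier": "frontend"},
    "web-1": {"app": "web", "tier": "frontend"},
    "db-0": {"app": "db"},
}
print(select_pods({"app": "web"}, pods))  # ['web-0', 'web-1']
```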

Ingress

An Ingress is a Kubernetes resource that uses rules to control access to services from outside the cluster. It lets users define routing rules and configure HTTP and HTTPS load balancing for applications.
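
Host- and path-based routing of this kind can be sketched as a lookup that picks the longest matching path prefix for a given host. This is a simplification: real Ingress controllers have more precise path-matching rules, and the host and service names below are hypothetical.

```python
from typing import Optional

Rule = tuple[str, str, str]  # (host, path prefix, backend service name)


def route(rules: list[Rule], host: str, path: str) -> Optional[str]:
    """Return the backend service for a request, preferring the longest matching prefix."""
    best: Optional[Rule] = None
    for rule in rules:
        rule_host, prefix, _service = rule
        if rule_host == host and path.startswith(prefix):
            if best is None or len(prefix) > len(best[1]):
                best = rule
    return best[2] if best else None


rules = [
    ("shop.example.com", "/", "storefront"),
    ("shop.example.com", "/api", "api-backend"),
]
print(route(rules, "shop.example.com", "/api/orders"))  # api-backend
print(route(rules, "shop.example.com", "/cart"))        # storefront
print(route(rules, "other.example.com", "/"))           # None
```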

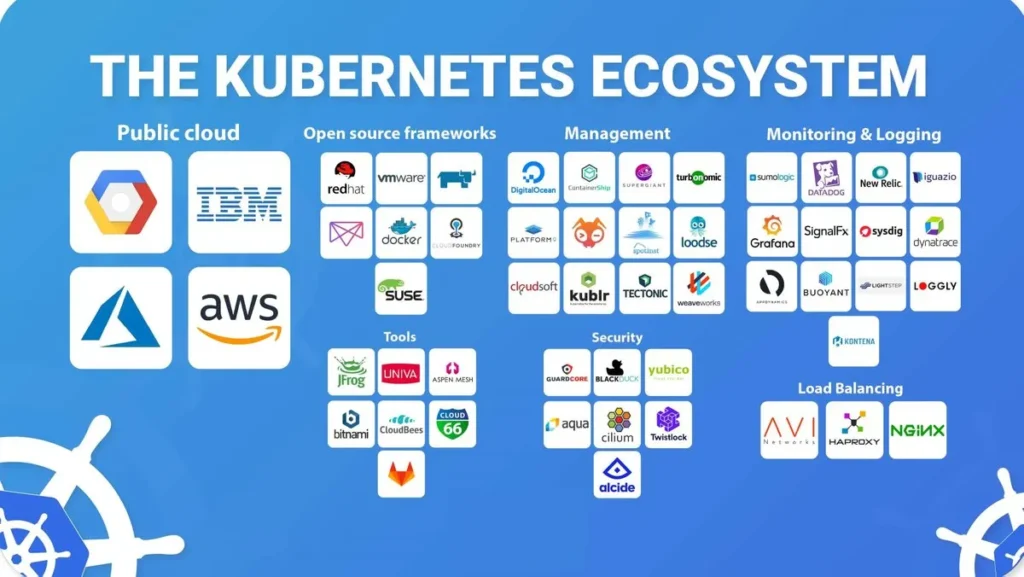

Kubernetes Ecosystem

Helm

Helm is a package manager for Kubernetes that simplifies how applications are deployed, maintained, and scaled. It lets users deploy Kubernetes resources through charts, which are packages that can be shared with others.
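
The core idea behind a chart, a manifest template combined with default values that a user can override, can be sketched as follows. Helm itself uses Go templates; Python's string.Template stands in for them here, and the heavily reduced manifest, value names, and function name are all hypothetical.

```python
from string import Template

# A heavily reduced manifest template; a real Deployment manifest needs more fields.
MANIFEST_TEMPLATE = Template(
    "apiVersion: apps/v1\n"
    "kind: Deployment\n"
    "metadata:\n"
    "  name: ${release}-web\n"
    "spec:\n"
    "  replicas: ${replicas}\n"
)


def render(defaults: dict[str, str], overrides: dict[str, str]) -> str:
    """Merge user overrides over the defaults and fill in the template."""
    values = {**defaults, **overrides}
    return MANIFEST_TEMPLATE.substitute(values)


manifest = render({"release": "demo", "replicas": "1"}, {"replicas": "3"})
print(manifest)
```

The override replaces the default replica count, so the rendered manifest asks for three replicas of demo-web.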

Operators

Operators are a pattern for packaging, deploying, and managing Kubernetes applications. They use custom resources and controllers to automate routine operational tasks such as creating a database or managing configuration.

Istio

Istio is an open-source service mesh that provides traffic management, network security, and observability for microservices running on Kubernetes. It lets users control traffic and apply security and reliability policies to the communication between services.

Challenges and Considerations

Complexity

Kubernetes is a powerful and flexible tool, but its intricacy can be intimidating for a novice user. Organisations may therefore need to dedicate resources to training and staff development in order to harness its full potential.

Learning Curve

Kubernetes remains difficult for new users, mostly because it assumes familiarity with containers, orchestration, and distributed systems. The learning curve can be eased with resources such as documentation, tutorials, and community forums.

Security

Because a cluster exposes several points of access, even when it sits inside a private network, the security of Kubernetes clusters depends on authentication, role-based access control, restrictions on network access, and other measures. Organisations should follow security best practices and update their clusters regularly to address security issues.
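
Role-based access control boils down to checking a request against a list of rules that grant verbs on resources, and denying anything not explicitly granted. The sketch below shows that check in a reduced form; real Kubernetes RBAC rules carry additional fields such as API groups, and the function and variable names here are assumptions.

```python
def is_allowed(rules: list[dict[str, list[str]]], verb: str, resource: str) -> bool:
    """Return True if any rule grants the verb on the resource ("*" matches anything)."""
    for rule in rules:
        verb_ok = verb in rule["verbs"] or "*" in rule["verbs"]
        resource_ok = resource in rule["resources"] or "*" in rule["resources"]
        if verb_ok and resource_ok:
            return True
    return False  # deny by default


# A hypothetical read-only role.
viewer_rules = [{"resources": ["pods", "services"], "verbs": ["get", "list", "watch"]}]
print(is_allowed(viewer_rules, "list", "pods"))    # True
print(is_allowed(viewer_rules, "delete", "pods"))  # False
```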

Innovation and Expansion

Kubernetes is still under very active development, with constant improvements made by its open-source community. New features and capabilities are added continually to meet new and unforeseen applications and demands.

Community Involvement

One of the critical aspects of the Kubernetes ecosystem is that it is open-source software with a large and active community that participates in decisions about the platform’s future development. Contributors from around the world drive new ideas, improve the documentation, and help users worldwide.

Kubernetes vs Docker

Kubernetes and Docker are often mentioned together because both are used in containerization, although they serve quite different purposes. Docker is a platform for building, shipping, and running applications in containers. It provides mechanisms for creating container images and managing container lifecycles, which ensures consistent application deployment across diverse environments.

Kubernetes, on the other hand, is a container orchestration system that automates tasks such as deploying, scaling, and managing applications. With features including service discovery, load balancing, and automatic scaling, Kubernetes was designed for managing complex, interconnected applications across several nodes.

Docker focuses on building and running individual containers, while Kubernetes is designed to manage large numbers of them. In other words, Docker gives developers the tools to build and run containers, and Kubernetes offers the means to orchestrate those containers at production scale. The two are complementary within the container ecosystem: Docker simplifies the creation of containers, and Kubernetes simplifies their orchestrated management.

Kubernetes Certification

Certification has become important in the Information Technology field, and particularly for Kubernetes, which has become a standard platform for orchestrating containerized microservices. These certifications validate an individual’s skill and effectiveness in implementing, administering, and securing Kubernetes clusters, and are therefore valuable for both individuals and organizations.

Several vendors offer certifications specifically in Kubernetes. These fall into the following categories: core Kubernetes certifications, application developer certifications, advanced cluster administrator certifications, and security specialist certifications.

The certifications may cover areas such as Kubernetes architecture, installation and configuration, application deployment, networking, security, and basic to advanced troubleshooting. Assessment usually involves an examination with simulated practical tasks that apply the concepts in realistic scenarios.

Among the available certifications, the most popular is the Certified Kubernetes Administrator (CKA) from the Cloud Native Computing Foundation (CNCF). The CKA exam tests an individual’s proficiency in core Kubernetes operations, such as setting up a cluster or troubleshooting problems, using the command-line interface.

Another widely chosen certification is the Certified Kubernetes Application Developer (CKAD), which is also provided by the CNCF. The CKAD exam checks a person’s ability to design, build, and deploy cloud-native applications on Kubernetes clusters. Tasks include creating application containers, pods, and services, and managing application workloads.

Besides these general Kubernetes certifications, there are specialized ones tailored to a particular field or occupation, such as cybersecurity, networking, or development.

Altogether, Kubernetes certification is a good opportunity for IT specialists to prove their competence in one of today’s most influential technologies, to widen the range of jobs open to them, and to help companies launch and operate Kubernetes-based systems effectively.

Conclusion

In conclusion, Kubernetes has revolutionized the way organizations deploy, manage, and scale containerized applications. Its robust architecture, rich ecosystem, and vibrant community make it the platform of choice for modern cloud-native development. While challenges exist, the benefits of Kubernetes far outweigh the complexities, paving the way for a future where applications are built and operated more efficiently and reliably.

FAQs

Q: What are some alternatives to Kubernetes?

A: Alternatives to Kubernetes include Docker Swarm, Apache Mesos, and Nomad. Each of these platforms has its own strengths and is designed for different kinds of use cases.

Q: How does Kubernetes handle scaling?

A: Kubernetes provides the Horizontal Pod Autoscaler (HPA), which adjusts the number of pods in a deployment based on CPU utilization and other metrics. This lets applications handle varying load without manual intervention from the user or developer.
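
The scaling decision rests on a simple ratio: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below applies that formula and clamps the result to a minimum and maximum. The real autoscaler also applies a tolerance band and stabilization behaviour that are omitted here, and the function name and default bounds are assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Compute ceil(current_replicas * current_metric / target_metric), clamped to the bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Four pods at 90% average CPU with a 60% target scale out to six pods.
print(desired_replicas(4, 90, 60))  # 6
# Four pods at 20% average CPU with a 60% target scale in to two pods.
print(desired_replicas(4, 20, 60))  # 2
```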

Q: Is Kubernetes only suitable for production environments?

A: Kubernetes is most often used in production environments, but it can also be used for small projects or during development. Tools such as Minikube and MicroK8s provide a small, manageable Kubernetes cluster for testing purposes.
